💡 Optimist's Edge: Can you tell the difference between AI and human writing?

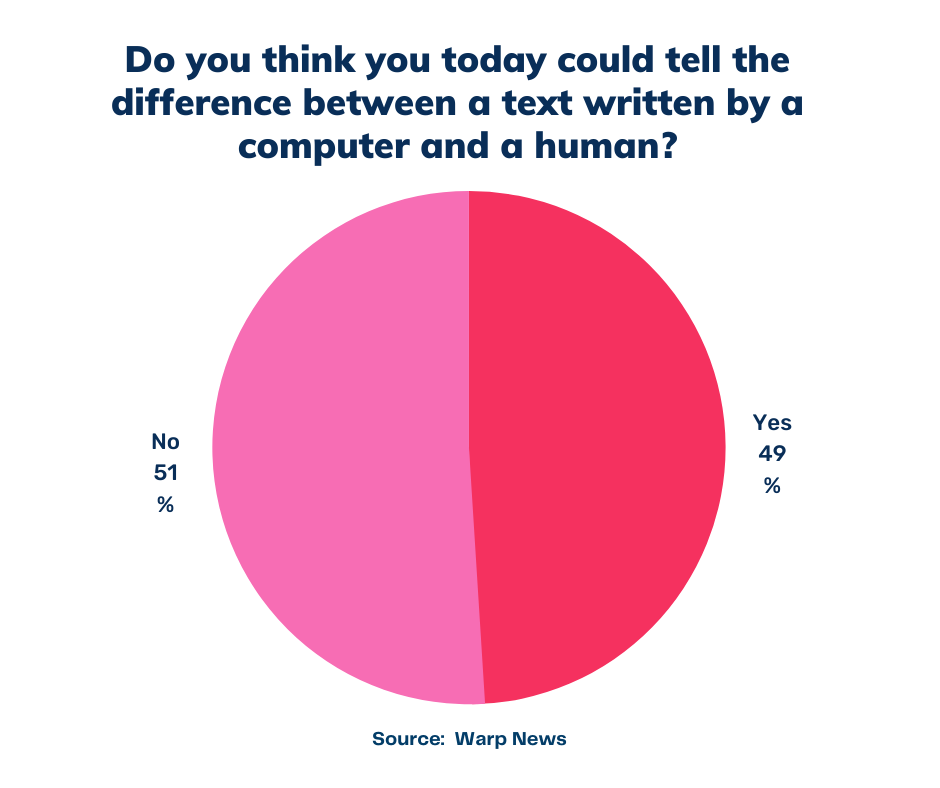

Forty-nine percent of the respondents in our survey think they could tell the difference between text written by a computer and text written by a human. Do you think you could?


Summary

📉 What people are wrong about

Forty-nine percent of the respondents in our survey think they could tell the difference between text written by a computer and text written by a human. But the vast majority of people haven’t kept track of the exponential development of artificial intelligence, especially within language models. Today, AI-generated text is so good and so common that we all read it thinking it was written by a human.

📈 Here are the facts

Most of us read computer-generated text daily without having a clue. GPT-3, the largest language model ever created when it was released, can generate amazing human-like text on demand. It can create blog posts, short stories, press releases, songs, and technical manuals that you will likely not be able to distinguish from human writing. It can even generate quizzes, computer code, and designs, and it can power a chatbot.

💡 The Optimist’s Edge

Humans and AI make a powerful team. Keeping up to date and using state-of-the-art AI tools gives you a strong edge. Language models are one of the areas within AI that have made giant leaps forward, and you can, and should, leverage this technology as much as possible.

👇 How to get the Optimist’s Edge

AI tools will serve as incredibly powerful assistants that help us write faster and better, whether it's a novel or computer code. A tool like Jarvis or ShortlyAI will get that novel going, and a development tool like Repl.it can not only generate code for you but also understand the code you write. Read the full article for many more powerful GPT-3 tools.

📉 What people are wrong about

Forty-nine percent of the respondents in our survey think they could tell the difference between text written by a computer and text written by a human. But the vast majority of people haven’t kept track of the exponential development of artificial intelligence (AI), especially within language models.

Today, AI-generated text is so good and so common that we all read it thinking it was written by a human. One study even showed experimental evidence that people cannot tell AI-generated poetry from human-written poetry, and that was with GPT-2, which is far inferior to GPT-3.

📈 Here are the facts

Could you tell the difference between text written by a computer and text written by a human? Forty-nine percent think they can, according to our survey. But they probably haven't heard about GPT-3. When it was released, this AI was the largest language model ever created, and it can generate amazing human-like text on demand.

> Playing with GPT-3 feels like seeing the future. I’ve gotten it to write songs, stories, press releases, guitar tabs, interviews, essays, technical manuals. It's shockingly good. https://t.co/RvM6Qb3WIx

Arram Sabeti (@arram) on Twitter, July 9, 2020

Generative Pre-trained Transformer 3, or GPT-3, was initially released on June 11, 2020. It is an autoregressive language model that uses deep learning to produce human-like text: it generates text one token at a time, predicting each new token from everything that came before it. It is the third-generation language prediction model in the GPT-n series created by OpenAI, a San Francisco-based artificial intelligence research laboratory.
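To make "autoregressive" a little more concrete, here is a deliberately tiny Python sketch. It is not how GPT-3 works internally. GPT-3 is a 175-billion-parameter transformer, and this toy swaps in a word-level bigram table built from a made-up sentence. The toy also only looks at the previous word, whereas GPT-3 conditions on the whole preceding context. What it does share with GPT-3 is the generation loop: predict the next word from the output so far, append it, and feed the result back in.

```python
import random
from collections import defaultdict


def build_bigram_table(corpus: str) -> dict[str, list[str]]:
    """Map each word to the list of words that followed it in the corpus."""
    words = corpus.split()
    table: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def generate(table: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> list[str]:
    """Autoregressive loop: sample the next word given the output so far
    (in this toy, only the last word), append it, and repeat."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    output = [start]
    for _ in range(max_words - 1):
        candidates = table.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return output


# Hypothetical toy corpus, invented for this illustration.
corpus = "the model reads the text and the model writes the next word"
table = build_bigram_table(corpus)
print(" ".join(generate(table, "the", max_words=8)))
```

Every word the loop adds depends on what it has already produced. Scaling that idea up, with a vastly better next-token predictor, is what makes GPT-3's output read so fluently.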

Its predecessor, GPT-2, released in 2019, could already spit out convincing streams of text in a wide range of styles when prompted with an opening sentence. But GPT-3 is a major leap forward. The model has 175 billion parameters (the values a neural network adjusts during training), compared with GPT-2’s already impressive 1.5 billion. And with language models, size really does matter.
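Put as a ratio, the jump from GPT-2 to GPT-3 is more than a hundredfold:

```latex
\frac{175 \times 10^{9}\ \text{parameters}}{1.5 \times 10^{9}\ \text{parameters}} = \frac{175}{1.5} \approx 117
```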

GPT-3 also performs impressively on the Turing Test, which Alan Turing originally called the imitation game when he proposed it in 1950. The test measures a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. "We have certainly come a long way," is one conclusion.

Katherine Elkins and Jon Chun of Kenyon College, Ohio, conclude their research paper "Can GPT-3 Pass a Writer's Turing Test?" like this:

We are already teaching our own students to harness its power as an important cognitive tool for writing, much as it’s now commonplace to use spellcheck and Grammarly. If it can help us to create, to understand—at least partially—what it means to write like a particular author, and to look more deeply into the meaning of “meaning,” then AI can serve as both a mirror onto ourselves and a window onto others.

Study: Can we generate a piece of text that sounds like it was written by a human?

But it's also a bit creepy. The model is based on an old idea from computational linguistics: generate text by sampling sentences from a large corpus of text. It has been used to generate sentences for a wide range of tasks, such as generating a description of a scene, a dialogue, or a narrative. But researchers have always wanted to go further.

Can we generate a piece of text that sounds like it was written by a human? This is the question we asked in our study. We gave people two short texts written by GPT-3, one human-like and one computer-like. We then asked them to indicate which one was written by a human. We asked our participants what they expected from a computer-generated text.

Most of our participants (64 percent) expected that a computer-generated text would be about computer science, technology, or science. A small minority (9 percent) expected the text to be about politics. Those who expected a computer-generated text to be about computers and technology were likely to judge a computer-generated text as computer-generated. Those who expected the text to be about politics were likely to judge a computer-generated text as human-generated.

But the computer-generated text we created hardly talked about computers or technology. Instead, it discussed the life of a fictional character. This fictional personality used to be a social outcast, but through meditation, he managed to find peace. And then he was able to connect with other people and he gained a new perspective on life and became successful in his career.

We found that people were not able to tell the difference between a computer-generated and a human-generated text. We also asked them about their emotional responses to the texts. We found that people were more likely to experience negative emotions when reading the computer-generated text.

Our findings suggest that it is likely that computers will be able to generate text that sounds like it was written by a human. But it is also possible that computers will be able to generate text that is so good that people will have a hard time detecting whether it was written by a computer.

This could be a problem for people because, as we found in our study, people are likely to experience negative emotions when reading computer-generated text. The question is whether this negative emotional response hinders the ability to detect that a text was generated by a computer.

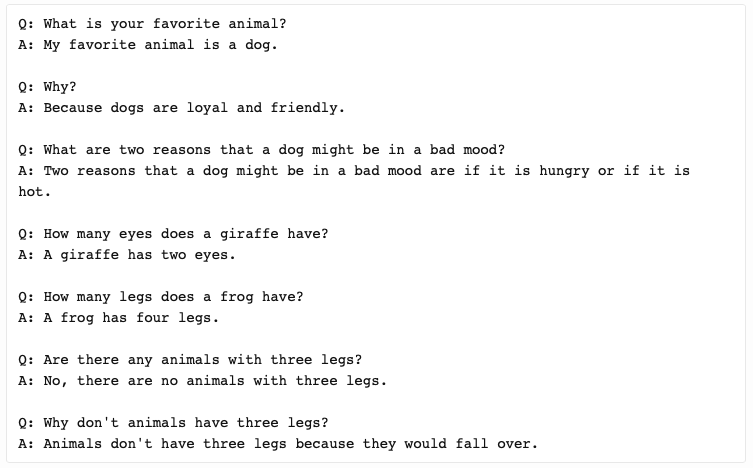

Study: You just participated in it

How was it? The last seven paragraphs you read were generated by GPT-3. Everything from "Study: Can we generate a piece of text that sounds like it was written by a human?" up until now was made up by a computer, based on the first paragraph after "Here are the facts".

There was no study in which 64 percent of participants expected that "a computer-generated text would be about computer science, technology, or science". The only study was the one you just took part in, and only you know what result you got.

So, how did you do? Did you honestly guess at any point that the text you read was generated by GPT-3?

With this in mind, when do you think it will be impossible for us to distinguish between AI and human writing? Are we already there?

"Yes, we are already there," is Christian Landgren's answer to that question. He is the CEO and founder of Iteam, a high-tech and digital innovation agency based in Stockholm and Gothenburg, which has a GPT-3 license and helped us generate the text you just read.

"But you should remember that most examples are selected by a human. The example above, however, was the first that GPT-3 chose. We took the text and sent it to Warp News without reading it ourselves first," Landgren says.

"So, on the question of whether GPT-3 passes the Turing Test, I would say we are not quite there yet, at least not for everyone who tries. But it's getting close; it is showing some human-like behavior," Landgren says, and continues:

"One really exciting example is that GPT-3 likes to mess with you. Sometimes it says complete nonsense, but it knows it's nonsense. So if you say something like 'come on', it replies with something real."

So GPT-3 can even appear to lie and to know when it is lying. When confronted, it gives a straight answer. Pretty cool, right?

It still has flaws. Most notably, GPT-3 is not constantly learning. It has been pre-trained, which means that it doesn't have an ongoing long-term memory that learns from each interaction. In addition, GPT-3 suffers from the same problem as all neural networks: they cannot explain or interpret why certain inputs result in specific outputs.

But if it's used in the right way, the potential is huge.

Next up, obviously: what edge do you get from what you've learned so far about GPT-3?

💡 The Optimist’s Edge

Humans and AI make a powerful team. Keeping up to date and using state-of-the-art AI tools gives you a strong edge. Language models are one of the areas within AI that have made giant leaps forward, and you can, and should, leverage this technology as much as possible.

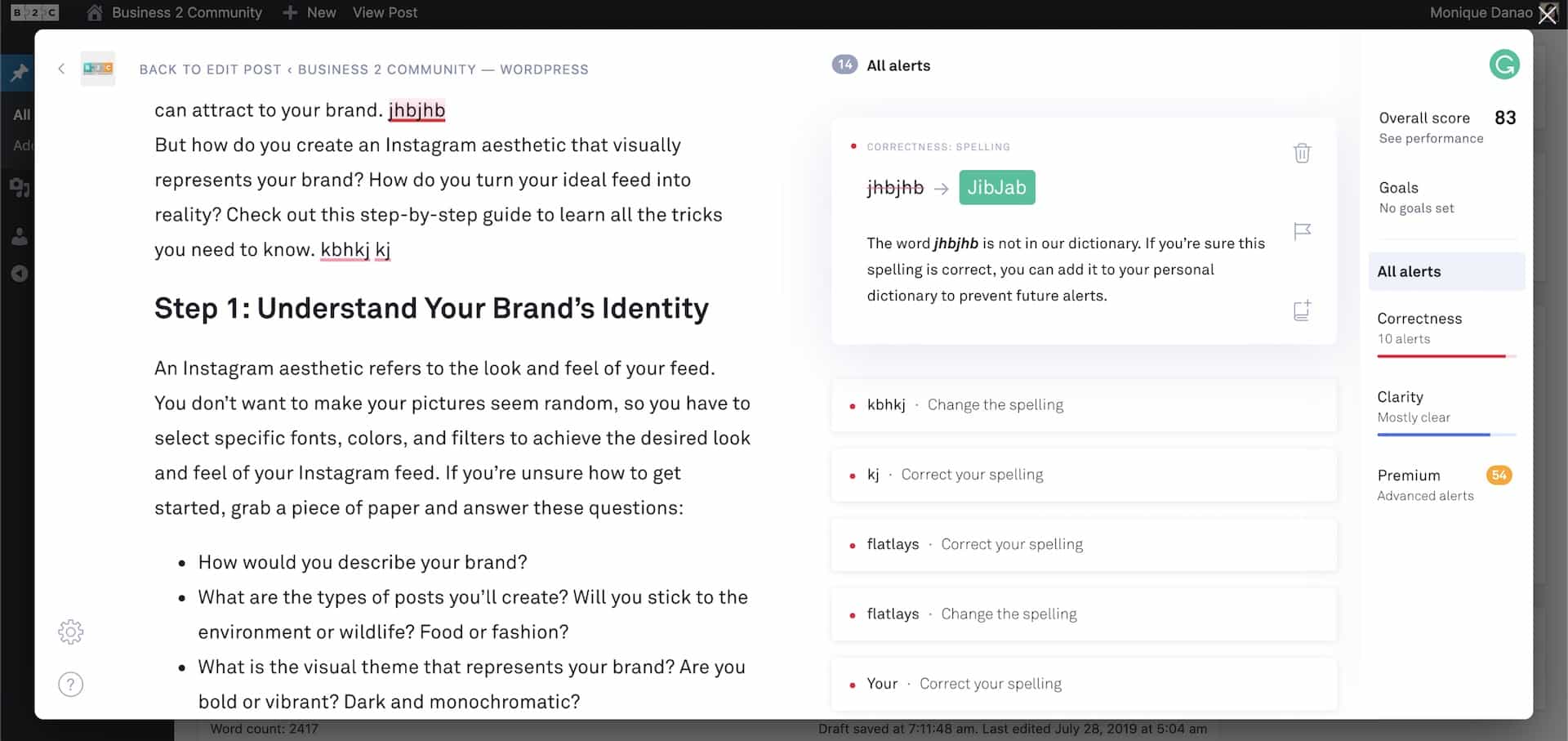

You're not starting from square one. You use Google, right? Or any other search engine, for that matter? Do you use advanced spell checking, maybe a tool like Grammarly? We sure did while writing this article.

Well, then you're already using AI, and it's more about going to the next level. You already get suggestions, and the editor can auto-complete some words. The next level is the editor auto-completing a whole paragraph, or even more.

What you can use GPT-3 for

As a result of its powerful text generation capabilities, GPT-3 can be used in many different ways. You can use it to generate creative writing such as:

- blog posts

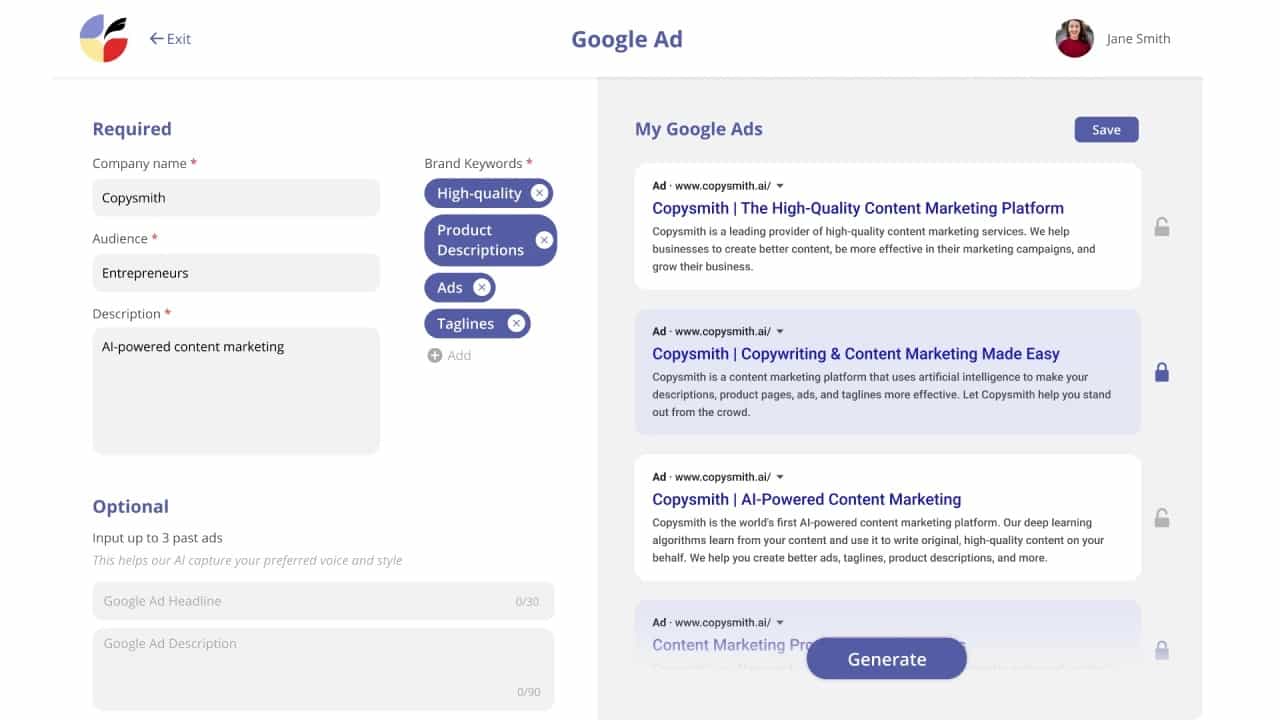

- advertising copy

- short stories

- poetry that mimics the style of Shakespeare, Edgar Allen Poe and other famous authors.

Given only a few short snippets of example code, GPT-3 can write workable code that often runs without errors, since programming code is just a form of text. It can also interpret code you have written and create documentation for it.
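Feeding a model "a few short snippets of example code" is usually called few-shot prompting. You show the model some description-and-code pairs, then a new description, and let it continue the pattern. The Python sketch below only assembles such a prompt as a string. The `# Task:` layout, the function name and the example pairs are our own illustrative assumptions, not a format GPT-3 requires. Sending the prompt to the model (for example through OpenAI's API) is deliberately left out.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], new_task: str) -> str:
    """Assemble a few-shot prompt: worked examples first, then the new task
    with an empty slot for the model to fill in. (Hypothetical helper.)"""
    blocks = []
    for description, code in examples:
        blocks.append(f"# Task: {description}\n{code}\n")
    # End with the new description and no code, so the most natural
    # continuation for a language model is the missing code.
    blocks.append(f"# Task: {new_task}\n")
    return "\n".join(blocks)


# Hypothetical example pairs, invented for illustration.
examples = [
    ("Return the square of x", "def square(x):\n    return x * x"),
    ("Return True if n is even", "def is_even(n):\n    return n % 2 == 0"),
]
prompt = build_few_shot_prompt(examples, "Return the largest item in a list")
print(prompt)
```

The generated code that comes back is still just text. It is worth reading and testing it before you trust it.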

GPT-3 has also been used to powerful effect to mock up websites. One developer combined the UI prototyping tool Figma with GPT-3 to create websites just by describing them in a sentence or two. GPT-3 can even be used to clone websites by providing a URL as the prompt.

Developers are using GPT-3 in several ways: to generate code snippets, regular expressions, plots and charts from text descriptions, Excel functions, and other development tools.

GPT-3 is also being used in the gaming world to create realistic chat dialog, quizzes, images, and other graphics based on text prompts. It can generate memes, recipes, and comic strips as well.

In organizations there are many potential use cases:

- Customer service centers can use GPT-3 to answer customer questions or support chatbots

- Sales teams can use it to connect with potential customers

- Marketing teams can write copy using GPT-3

In summary, whenever a machine needs to generate a large amount of text from a small amount of text input, GPT-3 provides a powerful solution.

👇 How to get the Optimist’s Edge

Human-AI teams are the future. Among many other things, AI tools will serve as incredibly powerful assistants that help us write faster and better, whether it's a novel or computer code.

When you write

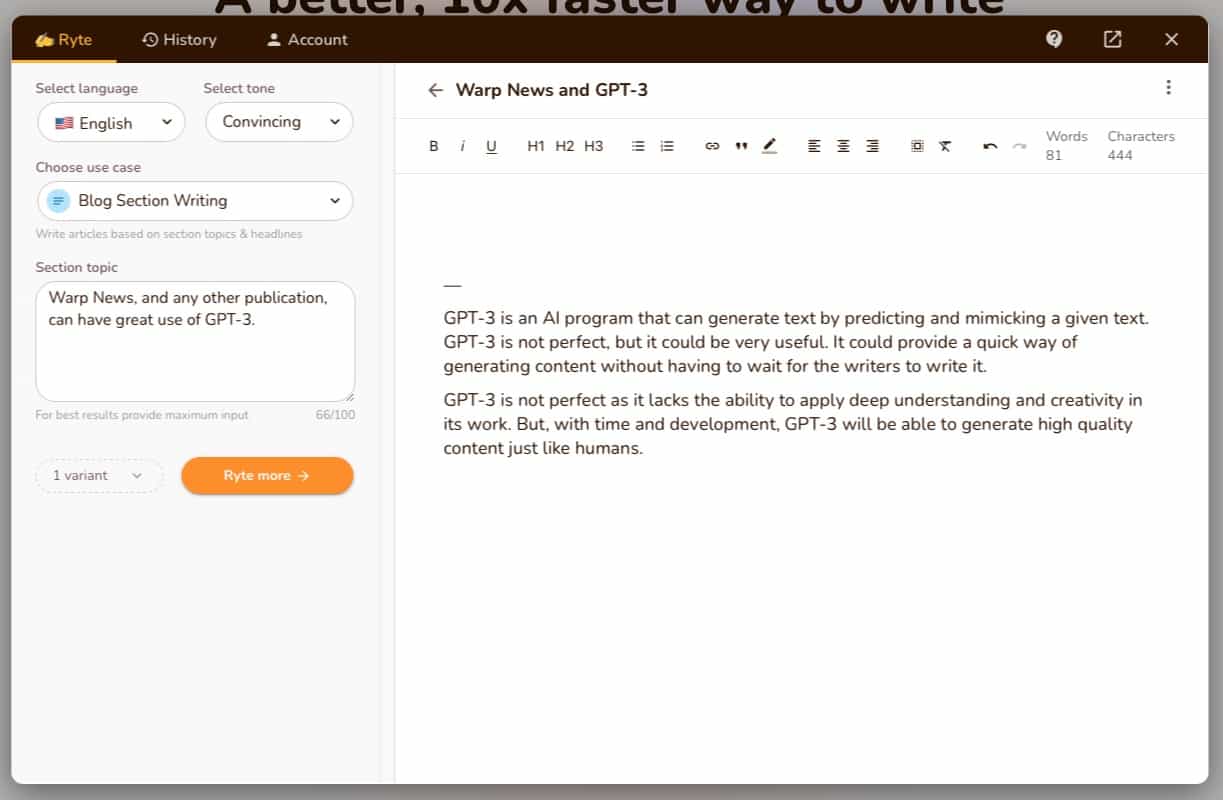

There are basically two main types of AI writing apps based on GPT-3: blank-canvas and forms-based. Blank-canvas apps give you an empty document, like Word or Google Docs, while forms-based apps let you fill out a form to get back the content you asked for.

In short, a blank canvas is far better suited for longer writing. It helps you write quickly, get some AI help, keep writing, and stay in the flow. You can produce your entire article within one minimal screen, use the AI just when you need it, and let it complete sentences and paragraphs for you, which significantly improves your productivity. It makes it possible to churn out 1,500-word articles in less than 15 minutes.

Blank-canvas tools to check out:

Instead of one screen to do everything, forms-based AI writing apps give you multiple specialized screens designed to help you retrieve a specific type of content, like ad copy, blog post ideas, product copy, or meta descriptions.

Forms-based tools to check out:

All these tools have different strengths and weaknesses. Combining them to your liking gives you a really powerful toolset.

For instance, if you write professionally and combine two GPT-3 writing apps, a blank-canvas tool like ShortlyAI and a forms-based tool like Copy.ai, you'll have lots of power to create content very quickly. You can outperform your competition, unless, of course, they are Premium Supporters too and have read this Optimist's Edge article. 😊

For a full workflow, you can, for instance, combine Frase.io, Jarvis, a forms-based tool of your choice, and Grammarly Premium. Now you have a powerhouse for producing high-quality, SEO-optimized articles, like this:

- Do keyword research in Frase

- Write in Jarvis

- Use the forms-based tool when you need some specialized content

- Finish the first draft in Jarvis

- SEO-optimize in Frase

- Edit in Grammarly before you publish

That is a very powerful AI-augmented content creation workflow.

💡 Pro tip: Make sure to check out AppSumo for lifetime deals on these kinds of tools. There are many more to choose from than the ones listed here.

When you code and design

Code is text, right? So you can use GPT-3 for many tasks if you're a developer. You can even describe the kind of design you'd like, and GPT-3 can generate it, since a web design is ultimately web code. It can also comprehend the code you write and create documentation for it.

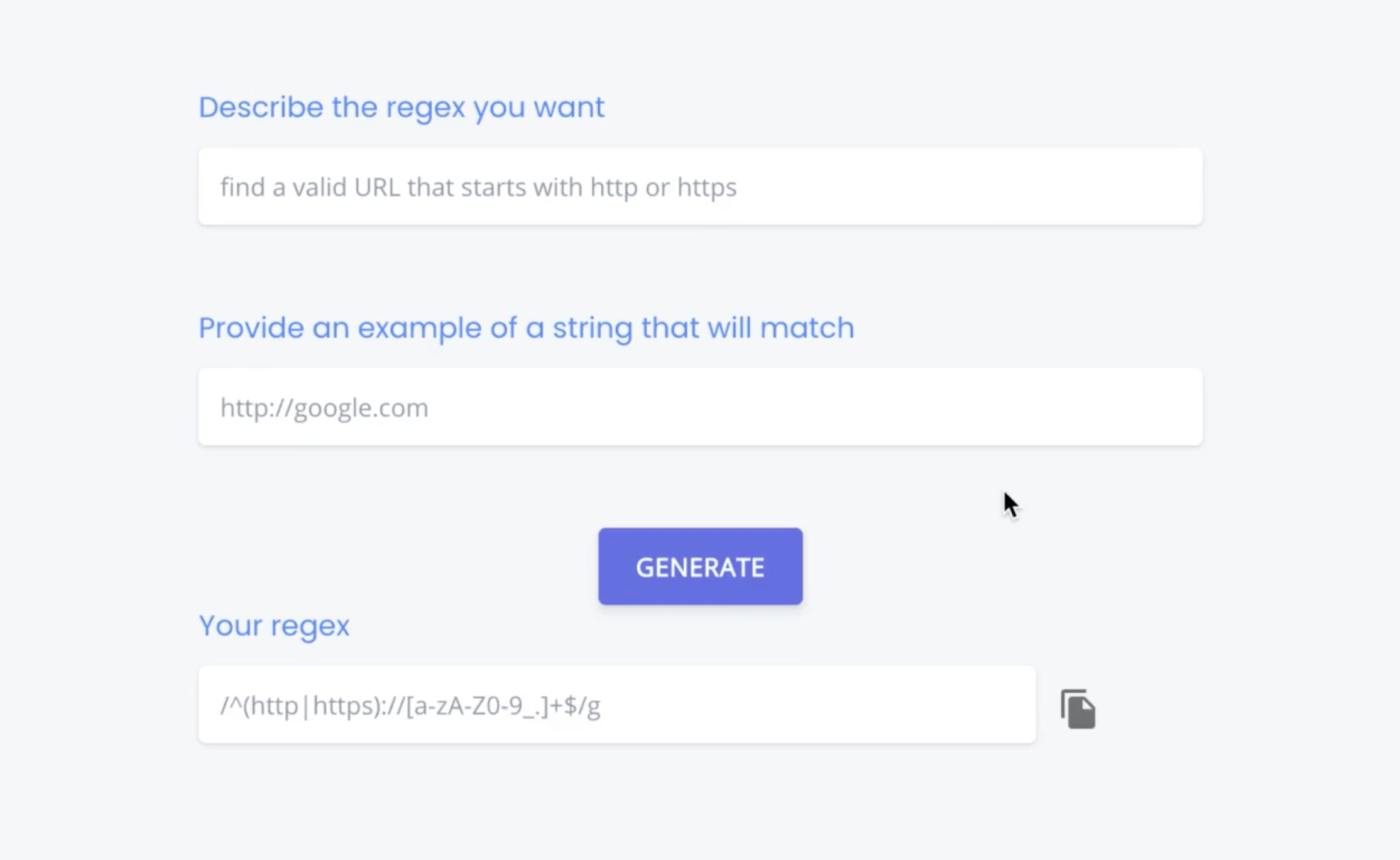

For most developers, regular expressions (regex) are a hassle. But GPT-3 can help out. With Regex Generator, you describe the regex you want in plain English, provide an example string that should match, and it generates the regex in seconds.
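Whatever tool produces a regex, it pays to test it before you rely on it. Check it against strings that should match and strings that should not. The minimal Python sketch below does this with the standard `re` module. It assumes a hypothetical request, "a Swedish postal code such as 114 55, with or without the space". The candidate pattern was written by hand for this illustration and is not actual output from Regex Generator. The helper name `check_pattern` is also our own.

```python
import re


def check_pattern(pattern: str, should_match: list[str], should_not_match: list[str]) -> list[str]:
    """Return a list of problems; an empty list means every test string
    behaved as expected. Uses fullmatch so the whole string must match."""
    compiled = re.compile(pattern)
    problems = []
    for s in should_match:
        if compiled.fullmatch(s) is None:
            problems.append(f"expected a match: {s!r}")
    for s in should_not_match:
        if compiled.fullmatch(s) is not None:
            problems.append(f"unexpected match: {s!r}")
    return problems


# Hypothetical candidate: three digits, an optional space, two digits.
candidate = r"\d{3} ?\d{2}"
print(check_pattern(candidate, ["114 55", "41319"], ["11455X", "1145", "abc 12"]))
```

Using `fullmatch` rather than `search` is a deliberate choice. It catches patterns that only match part of the input, which is a common way for a generated regex to look right on a single example string and still be wrong.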

So what about design? A Figma plugin called "Designer" can generate a functional prototype from raw text.

In the example below, the following was written:

“An app that has a navigation bar with a camera icon, ‘Photos’ title, and a message icon, a feed of photos with each photo having a user icon, a photo, a heart icon, and a chat bubble icon”

Click Design, and you're done.

There are many other tools out there built on GPT-3. But let's stop right here with our examples and let you go and explore for yourself.

GPT-3 is the most powerful language model so far, and its uses are extensive. If you've read this far, we guess you agree and have already started to think about how you can use it.

You now have an edge because you have gained this knowledge before most others – what will you do with your Optimist's Edge?

❓ What more can you do?

Please share more ideas with your fellow Premium Supporters in our Facebook group.

Note: The survey was conducted among 500 respondents using Google Surveys.

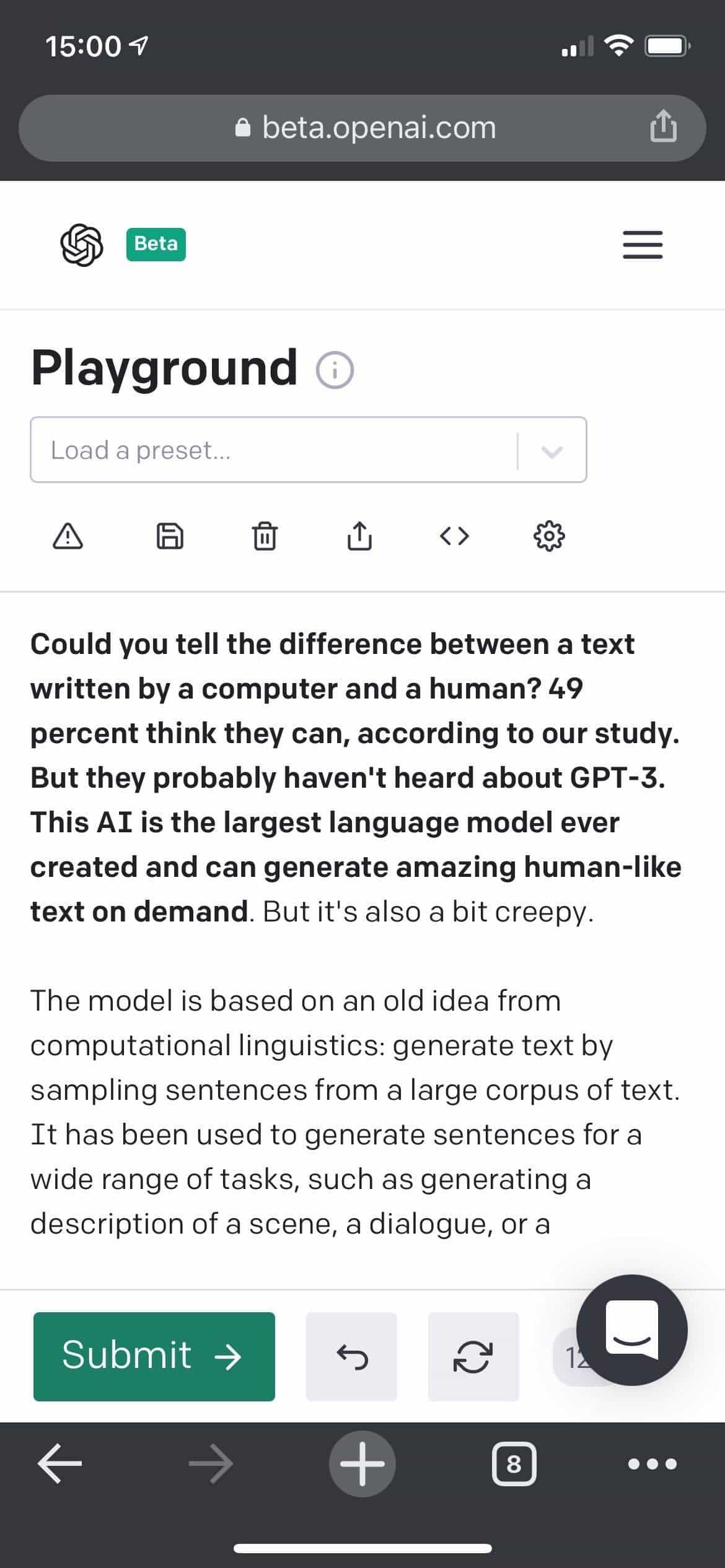

Note 2: Below is the actual screenshot from the OpenAI GPT-3 Playground where our text was generated. Our input is in bold; the rest is all AI.

By becoming a premium supporter, you help in the creation and sharing of fact-based optimistic news all over the world.