💡 Musings of the Angry Optimist: If we had regulated the internet the way we're regulating AI

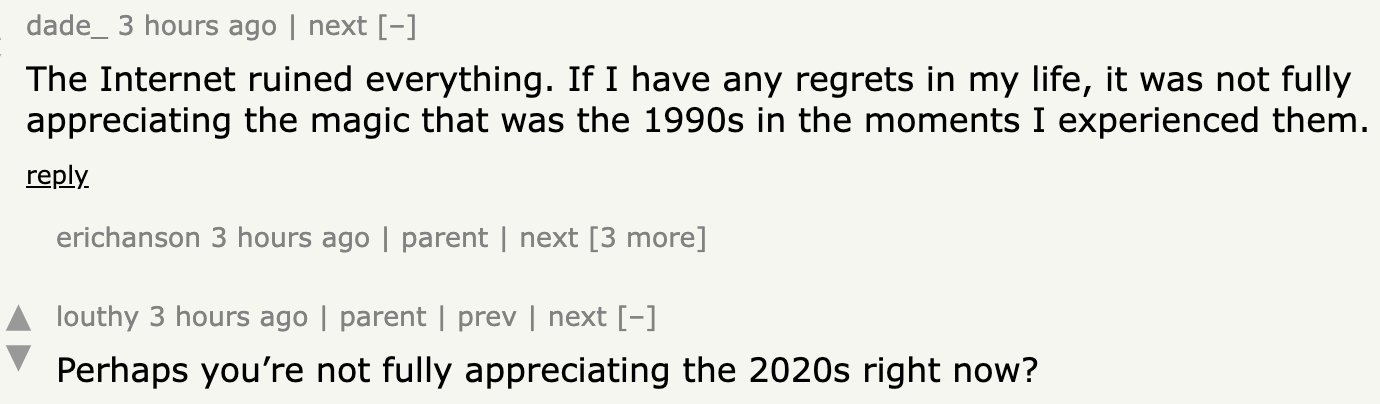

President Biden's AI regulation is vague and at the same time EXTREMELY specific. If we had regulated the internet in the same way, it would have seriously hampered our development. Also: We reveal which movie Biden watched that influenced his determination to regulate AI.


My thoughts, tips, and other tidbits that I believe suit a fact-based optimist. This newsletter is for Premium Supporters of Warp News. Feel free to share it with friends and acquaintances.

⚖️ Destructive AI regulations

Last week, world leaders, AI scientists, and other AI people met at Bletchley Park for the AI Safety Summit, at the invitation of the UK Prime Minister, Rishi Sunak.

The outcome wasn’t as bad as it could have been. Some non-binding documents were signed, mostly free from exaggerated fears of human extinction.

But the day before the summit, President Biden signed an executive order that regulates AI. It’s a strange order: quite vague in parts, then incredibly specific. It says:

(i) any model that was trained using a quantity of computing power greater than 10^26 integer or floating-point operations, or using primarily biological sequence data and using a quantity of computing power greater than 10^23 integer or floating-point operations; and

(ii) any computing cluster that has a set of machines physically co-located in a single datacenter, transitively connected by data center networking of over 100 Gbit/s, and having a theoretical maximum computing capacity of 10^20 integer or floating-point operations per second for training AI.
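To make the specificity concrete, here is a rough Python sketch that encodes the two quoted conditions as simple checks. The constant and function names are hypothetical, the exact comparisons (for example, whether "having" a capacity of 10^20 operations per second means "at least" or "more than") are assumptions, and this is a simplified reading for illustration, not a legal interpretation of the order. It also does the arithmetic: a cluster running exactly at the cluster threshold, at theoretical peak, would need 10^26 / 10^20 = 10^6 seconds, roughly 11.6 days, to reach the model threshold.

```python
# Hypothetical, simplified reading of the two quoted thresholds.
# Not a legal interpretation of the executive order.

MODEL_OPS_THRESHOLD = 10**26             # total training operations, general models
BIO_MODEL_OPS_THRESHOLD = 10**23         # total training operations, mainly biological sequence data
CLUSTER_OPS_PER_SEC_THRESHOLD = 10**20   # theoretical peak operations per second of the cluster
CLUSTER_NETWORK_GBPS_THRESHOLD = 100     # data center networking speed in Gbit/s


def model_is_covered(training_ops: float, mostly_bio_data: bool = False) -> bool:
    """True if total training compute is greater than the applicable threshold."""
    limit = BIO_MODEL_OPS_THRESHOLD if mostly_bio_data else MODEL_OPS_THRESHOLD
    return training_ops > limit


def cluster_is_covered(peak_ops_per_sec: float, network_gbps: float,
                       single_datacenter: bool) -> bool:
    """True if a cluster meets all three quoted conditions (assumed: peak >= threshold)."""
    return (
        single_datacenter
        and network_gbps > CLUSTER_NETWORK_GBPS_THRESHOLD
        and peak_ops_per_sec >= CLUSTER_OPS_PER_SEC_THRESHOLD
    )


# Time for a cluster at exactly the cluster threshold to accumulate
# the model threshold, assuming it runs at theoretical peak the whole time.
seconds = MODEL_OPS_THRESHOLD / CLUSTER_OPS_PER_SEC_THRESHOLD  # 1e6 seconds
days = seconds / 86_400

if __name__ == "__main__":
    print(model_is_covered(2e26))                         # True
    print(model_is_covered(2e23, mostly_bio_data=True))   # True
    print(cluster_is_covered(1e20, 200, True))            # True
    print(f"{days:.1f} days")                             # 11.6 days
```

In other words, one day's difference in training time on such a cluster can be the difference between falling under the rule or not.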

Imagine if in 1996 we had regulated the internet in a similar way:

"Any organizations that have more than 150 servers, each at least 15GB, interconnected in a network must..."

or

"Any organizations that are connected to a network with at least 10 Mbit/s..."

This is how strange it gets when you don’t know what should be regulated but have still decided to regulate "AI". And the outcomes of AI are already regulated. For example, you are not allowed to use AI to scam people, just as you are not allowed to do it with pen and paper.

A point I developed last week in this text:

Warp News, Mathias Sundin


AI has caused few real problems and no societal problems. There is nothing to regulate. Existential risk is built on guesses, and we should not regulate based on guesses. When problems related to AI arise (and they will), we can regulate them then.

If we had regulated the internet the way Biden is now trying to regulate AI, there is a significant risk that the internet would have had much less impact, which would have hampered human development. The internet is of course not problem-free, but the positives greatly outweigh the negatives.

Others have written well about this:

Ben Evans:

The hardest problem, though, is how policy can handle the idea of AI, or rather AGI, as an existential risk itself. The challenge even in talking about this, let alone regulating it, is that we lack any theoretical model for what our own intelligence is, nor the intelligence of other creatures, nor what machine general intelligence might be.

You can write a rule about model size, but you don’t know if you’ve picked the right size, nor what that means: you can try to regulate open source, but you can’t stop the spread of these models any more than the Church could stop Luther’s works spreading, even if he led half of Christendom to damnation.

Steven Sinofsky:

What we do know is that we are at the very earliest stages. We simply have no in-market products, and that means no in-market problems, upon which to base such concerns of fear and need to “govern” regulation.

Alarmists or “existentialists” say they have enough evidence. If that’s the case then so be it, but then the only way to truly make that case is to embark on the legislative process and use democracy to validate those concerns.

I just know that we have plenty of past evidence that every technology has come with its alarmists and concerns and somehow optimism prevailed. Why should the pessimists prevail now?

Ben Thompson (responding to Sinofsky):

They should not. We should accelerate innovation, not attenuate it. Innovation — technology, broadly speaking — is the only way to grow the pie, and to solve the problems we face that actually exist in any sort of knowable way, from climate change to China, from pandemics to poverty, and from diseases to demographics.

To attack the solution is denialism at best, outright sabotage at worst. Indeed, the shoggoth to fear is our societal sclerosis seeking to drag the most exciting new technology in years into an innovation anti-pattern.

Mathias Sundin

The Angry Optimist

❗ Of interest?

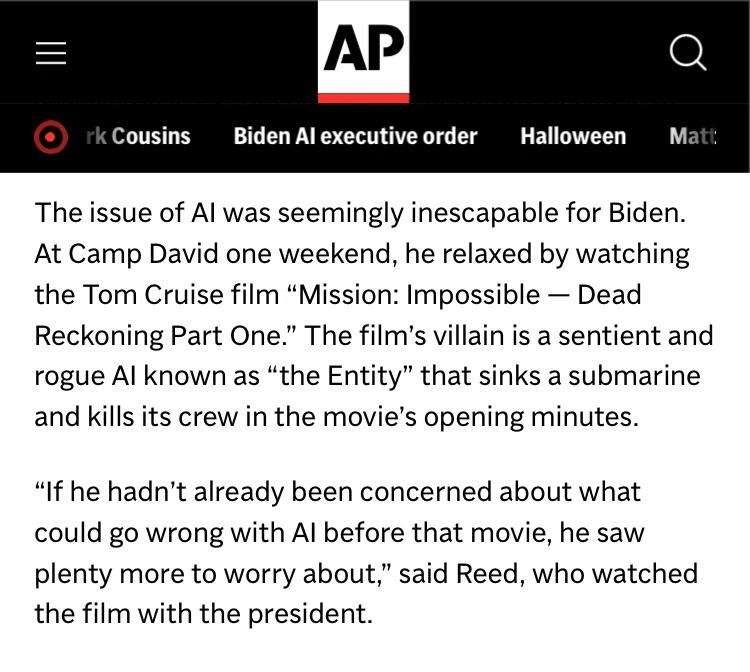

🤔 Good point

🤦 Inspiration for Biden's AI decision

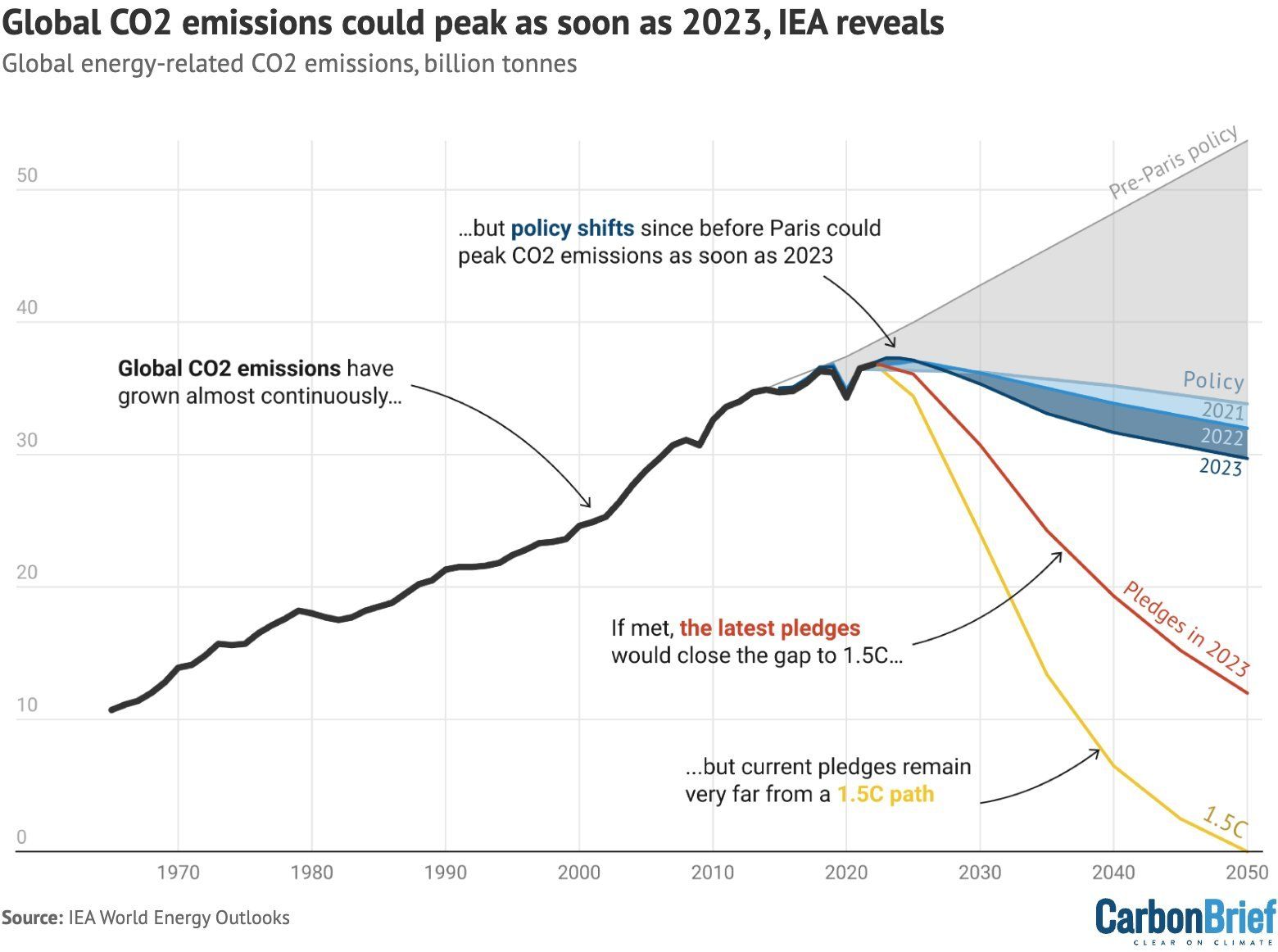

📉 Peak CO2 soon?


By becoming a Premium Supporter, you help create and share fact-based optimistic news all over the world.